
Monday, September 30, 2013

Data Mining: What is Data Mining?

Thursday, September 26, 2013

Import data from Excel to SQLServer

We often have Excel sheets holding large amounts of data that need to be imported into a database system, either for better querying capabilities or as a backend data source for software development.

We can achieve this either by copy-pasting the Excel rows directly into the destination table in SQL Server Management Studio, or by querying the Excel sheet directly from SQL Server Management Studio.

The former way (copy-pasting) looks simple, but consider a situation where you have 10K records in a single Excel sheet and want to fetch only selected or filtered records. In that case the latter way (querying the sheet) is far more efficient.

I've created a sample Excel file to import into SQL Server, and it is the file I will be using throughout this article. Here is a screenshot of it.

| Excel sheet - Cars |

OPENROWSET() to query Excel files

Here, I've used the OPENROWSET() function to query Excel files. This is a T-SQL function that can be used to access any OLE DB data source; all you need is the right OLE DB provider.

The following queries retrieve data from Excel files.

--Excel 2007-2010
SELECT * --INTO #Cars
FROM OPENROWSET('Microsoft.ACE.OLEDB.12.0',
    'Excel 12.0 Xml;HDR=YES;Database=C:\cars.xlsx',
    'SELECT * FROM [cars$]');

--Excel 97-2003 (uses the Jet provider, which is 32-bit only)
SELECT * --INTO #Cars
FROM OPENROWSET('Microsoft.Jet.OLEDB.4.0',
    'Excel 8.0;HDR=YES;Database=C:\cars.xls',
    'SELECT * FROM [cars$]');
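The advantage of querying the sheet over copy-pasting is that the filtering happens in the query itself. The sketch below shows one way this might look. It assumes the cars sheet has columns named Make and Price; these names are hypothetical (the real headers are only visible in the screenshot), so substitute your own.

```sql
-- Sketch: import only filtered rows from the Excel 2007-2010 workbook.
-- [Make] and [Price] are hypothetical column names; with HDR=YES the
-- real names come from the first row of the [cars$] sheet.
SELECT c.[Make], c.[Price]
INTO #Cars                       -- temp table created from the result set
FROM OPENROWSET('Microsoft.ACE.OLEDB.12.0',
        'Excel 12.0 Xml;HDR=YES;Database=C:\cars.xlsx',
        'SELECT * FROM [cars$]') AS c
WHERE c.[Price] > 10000;         -- filter applied by SQL Server

SELECT * FROM #Cars;
```

The WHERE clause could also be placed inside the pass-through query string, in which case the OLE DB provider would filter the rows before they reach SQL Server.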

SQL Server blocked access to STATEMENT OpenRowset OpenDatasource of component Ad Hoc Distributed Queries

Error :

SQL Server blocked access to STATEMENT 'OpenRowset/OpenDatasource' of component 'Ad Hoc Distributed Queries' because this component is turned off as part of the security configuration for this server. A system administrator can enable the use of 'Ad Hoc Distributed Queries' by using sp_configure. For more information about enabling 'Ad Hoc Distributed Queries', see "Surface Area Configuration" in SQL Server Books Online.

Solution :

The solution for this common error is to enable OPENROWSET in SQL Server by turning on two options: Show Advanced Options and Ad Hoc Distributed Queries. Ad Hoc Distributed Queries is an advanced option, so Show Advanced Options has to be enabled first. The error appears whenever you execute an ad hoc query that uses OPENROWSET or OPENDATASOURCE while the option is off. Once you have enabled it, later statements that use it will run without this error.

To enable Show Advanced Options, use the following T-SQL statements:

EXEC sp_configure 'show advanced options', 1;
GO
RECONFIGURE;
GO

After executing the above statements, if you try to use OPENROWSET or any other T-SQL command that requires the Ad Hoc Distributed Queries option, you will still get the error below, because that option itself has not been enabled yet:

Msg 15281, Level 16, State 1, Line 1 SQL Server blocked access to STATEMENT 'OpenRowset/OpenDatasource' of component 'Ad Hoc Distributed Queries' because this component is turned off as part of the security configuration for this server. A system administrator can enable the use of 'Ad Hoc Distributed Queries' by using sp_configure. For more information about enabling 'Ad Hoc Distributed Queries', see "Surface Area Configuration" in SQL Server Books Online.

We can get rid of the above error using the following T-SQL commands:

EXEC sp_configure 'Ad Hoc Distributed Queries', 1;
GO
RECONFIGURE;
GO

Once these statements have been executed, you will be able to use OPENROWSET successfully. To enable these two options you must have the sysadmin role. If you do not have this role, ask your database administrator to perform this operation.
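The two steps can also be run as a single script and then verified. The following is a minimal sketch of that idea; the check against sys.configurations and the final step that switches the option back off are additions for illustration, not part of the original fix.

```sql
-- Sketch: enable both options in one script, then confirm the result.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
EXEC sp_configure 'Ad Hoc Distributed Queries', 1;
RECONFIGURE;
GO

-- value is the configured setting, value_in_use is what is running now;
-- both should be 1 for the two options.
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name IN ('show advanced options', 'Ad Hoc Distributed Queries');
GO

-- Optional: switch ad hoc access back off once the import is finished.
EXEC sp_configure 'Ad Hoc Distributed Queries', 0;
RECONFIGURE;
GO
```

Since the error message says the component is turned off as part of the server's security configuration, switching it back off after a one-time import may be a reasonable habit.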

Monday, September 16, 2013

Thursday, August 1, 2013

How To Create an ADO.NET Data Access Utility Class for SQL Server

| By: Ray Barley |

Problem

I am a .NET developer and I typically write applications that use a SQL Server database. I'm looking for a really simple, reusable class that encapsulates my ADO.NET database access code for create, read, update and delete (CRUD). As I see it I need two methods in the class: one that executes a stored procedure that returns a result set and another that executes a stored procedure that does an insert, update or a delete. Can you provide an example of how to do it?

Solution

While there are many code samples readily available to encapsulate ADO.NET database access, I prefer the simple, bare-bones approach that satisfies your requirements of a method that executes a query and another that executes a command. In this tip I will review a solution that has a class library for the database utility and a console application that uses the class library.

I will assume that the reader is familiar with creating .NET applications using Visual Studio.

Connection Strings

When you write ADO.NET code to access a database, you need a connection string to specify the database that you want to access. The connection string can be stored in your application's app.config file or web.config file (for a web application). The following is an example of an app.config file:

<?xml version="1.0" encoding="utf-8" ?>

<configuration>

<startup>

<supportedRuntime version="v4.0" sku=".NETFramework,Version=v4.5" />

</startup>

<connectionStrings>

<add name="mssqltips"

connectionString="data source=localhost;initial catalog=mssqltips;Integrated Security=SSPI;"

providerName="System.Data.SqlClient" />

</connectionStrings>

</configuration>

The following are the main points about the app.config file:

- mssqltips is the name of the connection string; we will use the name mssqltips to access the connection string

- Data source is the server name of the SQL Server database instance

- Initial catalog is the database name

- Integrated Security=SSPI means we are using windows authentication to connect to the database

- Provider name is the ADO.NET data provider for SQL Server

You can specify many more settings in the connection string than I have shown here. Take a look at the SqlConnection.ConnectionString Property documentation for the details.

Database Utility Class

I will use a class library project named DataAccessUtility to implement the database access utility class. When a class library is compiled it generates a dynamic link library (.DLL) which can then be referenced from any .NET application. The class library will have a single class named SqlDatabaseUtility with the following methods:

- GetConnection() opens a database connection

- ExecuteQuery() executes a stored procedure that performs a query

- ExecuteCommand() executes a stored procedure that performs an insert, update or delete

Before I get to reviewing the code in the methods, here are a couple of things that need to be done:

- Add a reference to System.Configuration to the class library project; I need this to access the connection string in the app.config file

- Add using statements to the SqlDatabaseUtility class for the namespaces System.Configuration, System.Data and System.Data.SqlClient; I am using classes from these namespaces

The GetConnection() method has the following code:

public SqlConnection GetConnection(string connectionName)

{

string cnstr = ConfigurationManager.ConnectionStrings[connectionName].ConnectionString;

SqlConnection cn = new SqlConnection(cnstr);

cn.Open();

return cn;

}

The main points about the GetConnection() method are:

- Reads the connection string from the app.config (or web.config) file

- Creates an instance of a SqlConnection object passing the connection string into the constructor

- Calls the Open() method on the SqlConnection object which "opens" a database connection

- Returns the SqlConnection object to the caller

The ExecuteQuery() method has the following code:

public DataSet ExecuteQuery(
    string connectionName,
    string storedProcName,
    Dictionary<string, SqlParameter> procParameters
)
{
    DataSet ds = new DataSet();
    using (SqlConnection cn = GetConnection(connectionName))
    {
        using (SqlCommand cmd = cn.CreateCommand())
        {
            cmd.CommandType = CommandType.StoredProcedure;
            cmd.CommandText = storedProcName;
            // assign parameters passed in to the command
            foreach (var procParameter in procParameters)
            {
                cmd.Parameters.Add(procParameter.Value);
            }
            using (SqlDataAdapter da = new SqlDataAdapter(cmd))
            {
                da.Fill(ds);
            }
        }
    }
    return ds;
}

The main points about the ExecuteQuery() method are:

- Creates a Dataset that will be used to return the query results to the caller

- Calls the GetConnection() method to open a database connection

- Creates a SqlCommand object from the Connection, and sets the CommandType and CommandText properties

- Adds any parameters passed in to the SqlCommand parameter collection

- Creates a SqlDataAdapter for the SqlCommand, and calls the Fill method to execute the query and populate a dataset

- Returns the Dataset to the caller

- The SqlConnection, SqlCommand, and SqlDataAdapter objects are wrapped with a "using" statement which ensures that the objects are disposed; the caller is not responsible for "freeing" these objects

The ExecuteCommand() method has the following code:

public int ExecuteCommand(

string connectionName,

string storedProcName,

Dictionary<string, SqlParameter> procParameters

)

{

int rc;

using (SqlConnection cn = GetConnection(connectionName))

{

// create a SQL command to execute the stored procedure

using (SqlCommand cmd = cn.CreateCommand())

{

cmd.CommandType = CommandType.StoredProcedure;

cmd.CommandText = storedProcName;

// assign parameters passed in to the command

foreach (var procParameter in procParameters)

{

cmd.Parameters.Add(procParameter.Value);

}

rc = cmd.ExecuteNonQuery();

}

}

return rc;

}

The main points about the ExecuteCommand() method are:

- Calls the GetConnection() method to open a database connection; the using construct is used to close the database connection automatically

- Creates a SqlCommand object from the Connection and sets the CommandType and CommandText properties

- Adds any parameters passed in to the SqlCommand parameter collection

- Calls the SqlCommand ExecuteNonQuery method to call the stored procedure; the return value is the number of rows affected, e.g. the number of rows inserted, updated or deleted by the command

- The SqlConnection and SqlCommand objects are wrapped with a "using" statement which ensures that the objects are disposed; the caller is not responsible for "freeing" these objects

Console Application

In this section I will review a .NET console application that will access a SQL Server database by using the SqlDatabaseUtility class. Here is a T-SQL script that creates a table, and two stored procedures - one that inserts a row and another that performs a query:

use mssqltips
go
create table [dbo].[customer] (
    [id] [int] identity(1,1) NOT NULL,
    [name] [varchar](50) NOT NULL,
    [state] [varchar](2) NOT NULL,
    constraint [pk_customer] primary key clustered ([id] asc)
)
go
create procedure dbo.AddCustomer
    @name varchar(50)
   ,@state char(2)
as
begin
    insert into dbo.customer ([name], [state])
    values (@name, @state)
end
go
create procedure dbo.GetCustomerList
as
begin
    select [id], [name], [state] from dbo.customer
end
go

Before I get to reviewing the code in the console application, here are a couple of things that need to be done:

- Add a reference to the DataAccessUtility class library to the console application; I need this to call the methods in the SqlDatabaseUtility class

- Add a using statement for the DataAccessUtility, System.Data and System.Data.SqlClient namespaces

- Create an mssqltips database and run the above T-SQL script in it

- Put the connectionStrings element (shown in the Connection Strings section above) into the app.config file in the console application project
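Before running the console application, it can help to confirm that the two stored procedures work when called directly. The following is a small sketch of such a check, run in the mssqltips database; the sample values are the same ones the console application passes.

```sql
-- Smoke test for the procedures created by the script above.
USE mssqltips;
GO
EXEC dbo.AddCustomer @name = 'Smith', @state = 'MD';  -- inserts one row
EXEC dbo.GetCustomerList;                             -- returns id, name, state
GO
```

If these calls succeed here, any later failure in the .NET code is more likely to come from the connection string or the parameter setup than from the database objects.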

Here is the code to call the AddCustomer stored procedure:

SqlDatabaseUtility dbutility = new SqlDatabaseUtility();

// add a customer

Dictionary<string, SqlParameter> cmdParameters = new Dictionary<string, SqlParameter>();

cmdParameters["name"] = new SqlParameter("name", "Smith");

cmdParameters["state"] = new SqlParameter("state", "MD");

dbutility.ExecuteCommand("mssqltips", "dbo.AddCustomer", cmdParameters);

The main points about the above code are:

- Create an instance of the SqlDatabaseUtility class

- Create a Dictionary collection for parameters; it's like a name-value pair

- Add parameters to the collection; parameter names must match the stored procedure parameters

- Call the SqlDatabaseUtility ExecuteCommand method passing in the connection name, stored procedure name, and the parameter collection

Here is the code to call the GetCustomerList stored procedure:

Dictionary<string, SqlParameter> queryParameters = new Dictionary<string, SqlParameter>();

DataSet ds = dbutility.ExecuteQuery("mssqltips", "dbo.GetCustomerList", queryParameters);

DataTable t = ds.Tables[0];

foreach(DataRow r in t.Rows)

{

Console.WriteLine(string.Format("{0}\t{1}\t{2}",

r[0].ToString(),

r[1].ToString(),

r[2].ToString()));

}

The main points about the above code are:

- Create a Dictionary collection for parameters; even though the GetCustomerList does not take any parameters, you still have to pass an empty collection

- Call the SqlDatabaseUtility ExecuteQuery method passing the connection name, stored procedure name, and empty parameter collection

- ExecuteQuery returns a Dataset which is a collection of DataTables

- Get the first Datatable from the Dataset, iterate through the rows and print the column values to the console

Next Steps

- The above code is an example of a very simple approach to calling stored procedures from .NET code.

- To keep the code as simple as possible, there is no exception handling shown. You do need try/catch blocks around your database calls. Take a look at the SqlCommand methods to see the kinds of exceptions that you need to catch.

- Download the sample code here to experiment on your own.

Tuesday, July 30, 2013

SSIS Interview Questions - Part 1

| By: Arshad Ali |

Problem

When preparing for a SQL Server interview, it is helpful to understand what questions may be asked related to SSIS. In this tip series, I will try to cover as much as I can to help you prepare.

Solution

What is SQL Server Integration Services (SSIS)?

- SQL Server Integration Services (SSIS) is a component of SQL Server 2005 and later versions. SSIS is an enterprise-scale ETL (Extraction, Transformation and Load) tool which allows you to develop data integration and workflow solutions. Apart from data integration, SSIS can be used to define workflows to automate updating multi-dimensional cubes and automating maintenance tasks for SQL Server databases.

How does SSIS differ from DTS?

- SSIS is a successor to DTS (Data Transformation Services) and has been completely re-written from scratch to overcome the limitations of DTS which was available in SQL Server 2000 and earlier versions. A significant improvement is the segregation of the control/work flow from the data flow and the ability to use a buffer/memory oriented architecture for data flows and transformations which improve performance.

What is the Control Flow?

- When you start working with SSIS, you first create a package which is nothing but a collection of tasks or package components. The control flow allows you to order the workflow, so you can ensure tasks/components get executed in the appropriate order.

What is the Data Flow Engine?

- The Data Flow Engine, also called the SSIS pipeline engine, is responsible for managing the flow of data from the source to the destination and performing transformations (lookups, data cleansing etc.). Data flow uses memory oriented architecture, called buffers, during the data flow and transformations which allows it to execute extremely fast. This means the SSIS pipeline engine pulls data from the source, stores it in buffers (in-memory), does the requested transformations in the buffers and writes to the destination. The benefit is that it provides the fastest transformation as it happens in memory and we don't need to stage the data for transformations in most cases.

What is a Transformation?

- A transformation simply means bringing the data into a desired format. For example, you are pulling data from the source and want to ensure only distinct records are written to the destination, so duplicates are removed. Another example is if you have master/reference data and want to pull only related data from the source, and hence you need some sort of lookup. There are around 30 transformation tasks available and this can be extended further with custom built tasks if needed.

What is a Task?

- A task is very much like a method of any programming language which represents or carries out an individual unit of work. There are broadly two categories of tasks in SSIS, Control Flow tasks and Database Maintenance tasks. All Control Flow tasks are operational in nature except Data Flow tasks. Although there are around 30 control flow tasks which you can use in your package you can also develop your own custom tasks with your choice of .NET programming language.

What is a Precedence Constraint and what types of Precedence Constraint are there?

- SSIS allows you to place as many tasks as you want in the control flow. You connect these tasks using connectors called Precedence Constraints. Precedence Constraints let you define the logical sequence in which the tasks are executed. You can also specify a condition to be evaluated before the next task in the flow is executed.

- These are the types of precedence constraints; the condition can be a constraint, an expression or both:

- Success (the next task is executed only when the previous task completed successfully)

- Failure (the next task is executed only when the previous task failed)

- Completion (the next task is executed whether the previous task succeeded or failed)

What is a container and how many types of containers are there?

- A container is a logical grouping of tasks which allows you to manage the scope of the tasks together.

- These are the types of containers in SSIS:

- Sequence Container - Used for grouping logically related tasks together

- For Loop Container - Used when you want to have repeating flow in package

- For Each Loop Container - Used for enumerating each object in a collection; for example a record set or a list of files.

- Apart from the above mentioned containers, there is one more container called the Task Host Container which is not visible from the IDE, but every task is contained in it (the default container for all the tasks).

What are variables and what is variable scope?

- A variable is used to store values. There are basically two types of variables, System Variable (like ErrorCode, ErrorDescription, PackageName etc) whose values you can use but cannot change and User Variable which you create, assign values and read as needed. A variable can hold a value of the data type you have chosen when you defined the variable.

- Variables can have a different scope depending on where they were defined. For example, you can have package level variables which are accessible to all the tasks in the package, and there can also be container level variables which are accessible only to the tasks within that container.

Monday, July 29, 2013

Import Excel unicode data with SQL Server Integration Services

| By: Greg Robidoux |

Problem

One task that most people are faced with at some point is the need to import data into SQL Server from an Excel spreadsheet. We have talked about different approaches to doing this in previous tips using OPENROWSET, OPENQUERY, Linked Servers, etc. These options are great, but they may not give you as much control as you need during the import process.

Another approach to doing this is using SQL Server Integration Services (SSIS). With SSIS you can import different types of data as well as apply other logic during the importing process. One problem though that I have faced with importing data from Excel into a SQL Server table is the issue of having to convert data types from Unicode to non-Unicode. SSIS treats data in an Excel file as Unicode, but my database tables are defined as non-Unicode, because I don't have the need to store other code sets and therefore I don't want to waste additional storage space. Is there any simple way to do this in SSIS?

Solution

If you have used SSIS to import Excel data into SQL Server, you may have run into the issue of having to convert data from Unicode to non-Unicode. By default Excel data is treated as Unicode, and by default when you create new tables SQL Server will make your character type columns Unicode as well (nchar, nvarchar, etc.). If you don't need to store Unicode data, you probably always use non-Unicode data types such as char and varchar when creating your tables. So what is the easiest way to import Excel data into non-Unicode columns?

The following shows two different examples of importing data from Excel into SQL Server. The first example uses Unicode datatypes and the second does not.

Here is what the data in Excel looks like.

Example 1 - Unicode data types in SQL Server

Our table "unicode" is defined as follows:

CREATE TABLE [dbo].[unicode](

[firstName] [nvarchar](50) NULL,

[lastName] [nvarchar](50) NULL

) ON [PRIMARY]

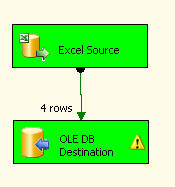

If we create a simple Data Flow Task and an Excel Source and an OLE DB Destination mapping firstname to firstname and lastname to lastname the import works great as shown below.

Example 2 - non-Unicode data types in SQL Server

Our table "non_unicode" is defined as follows:

CREATE TABLE [dbo].[non_unicode](

[firstName] [varchar](50) NULL,

[lastName] [varchar](50) NULL

) ON [PRIMARY]

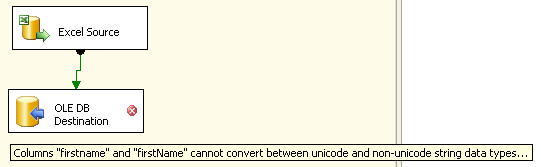

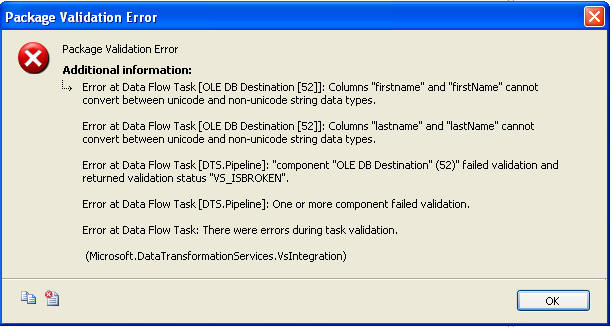

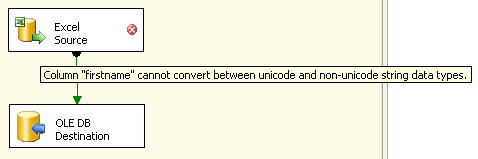

If we map the columns firstname to firstname and lastname to lastname we automatically get the following error in the OLE DB Destination.

Columns "firstname" and "firstname" cannot convert between unicode and non-unicode data types...

If we execute the task we get the following error dialog box which gives us additional information.

Solving the Problem

So based on the error we need to convert the data types so they are the same types.

If you right click on the OLE DB Destination and select "Show Advanced Editor" you have the option of changing the DataType from string [DT_STR] to Unicode string [DT_WSTR]. Once you click OK it looks like the change was saved, but if you open the editor again the change is gone and the original value is back. This makes sense, since you cannot change the data type of the column in the actual table from here.

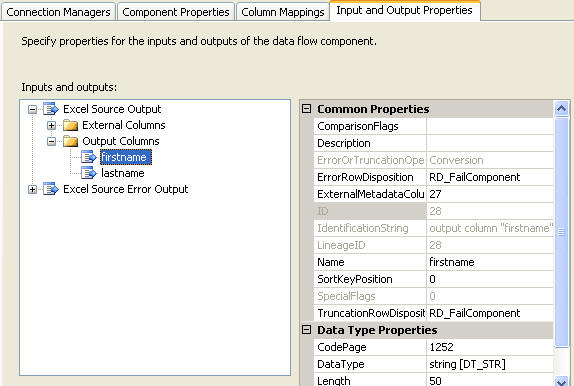

If you right click on the Excel Source and select "Show Advanced Editor" you have the option of changing the DataType from Unicode string [DT_WSTR] to string [DT_STR], and this time the change is saved.

However, after you click OK you get an error on the Excel Source saying that you cannot convert between Unicode and non-Unicode, as shown below. So this did not solve the problem either.

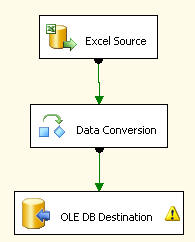

Using the Data Conversion Task

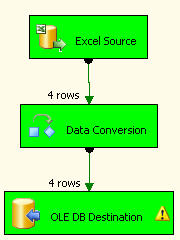

So to get around this problem we have to also use a Data Conversion task. This will allow us to convert data types so we can get the import completed. The following picture shows the "Data Conversion" task in between the Excel Source and the OLE DB Destination.

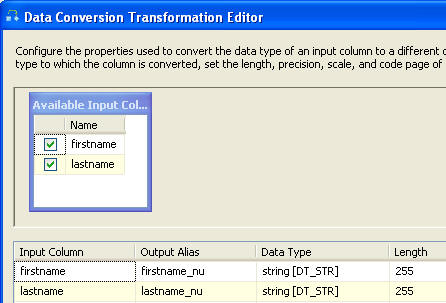

If you right click on "Data Conversion" and select properties you will get a dialog box such as the following. In here we created an Output Alias for each column.

Our firstname column becomes firstname_nu (this could be any name you want) and we are making the output be a non-unicode string. In addition we do the same thing for the lastname column.

If we save this and change the mapping as shown to use our new output columns and then execute the task we can see that the import was successful.

As you can see this is pretty simple to do once you know that you need to use the Data Conversion task to convert the data types.
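For comparison, the same Unicode to non-Unicode conversion can be written outside SSIS in T-SQL with an explicit CAST. This is only a sketch and not part of the package above. It assumes the OPENROWSET approach mentioned in the Problem section, and the workbook path C:\names.xlsx and the sheet name Sheet1 are hypothetical.

```sql
-- Sketch: convert Excel's Unicode strings to varchar while importing.
-- C:\names.xlsx and [Sheet1$] are hypothetical; firstName and lastName
-- are assumed to be the header row of the sheet.
INSERT INTO dbo.non_unicode (firstName, lastName)
SELECT CAST(x.firstName AS varchar(50)),
       CAST(x.lastName  AS varchar(50))
FROM OPENROWSET('Microsoft.ACE.OLEDB.12.0',
        'Excel 12.0 Xml;HDR=YES;Database=C:\names.xlsx',
        'SELECT firstName, lastName FROM [Sheet1$]') AS x;
```

As with the Data Conversion task, characters that have no equivalent in the target code page cannot be stored in a varchar column, so this is only appropriate when the data really is non-Unicode.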
